First Name:

Joseph

Last Name:

Li

Mentor:

Dr. Mark V. Albert

Abstract:

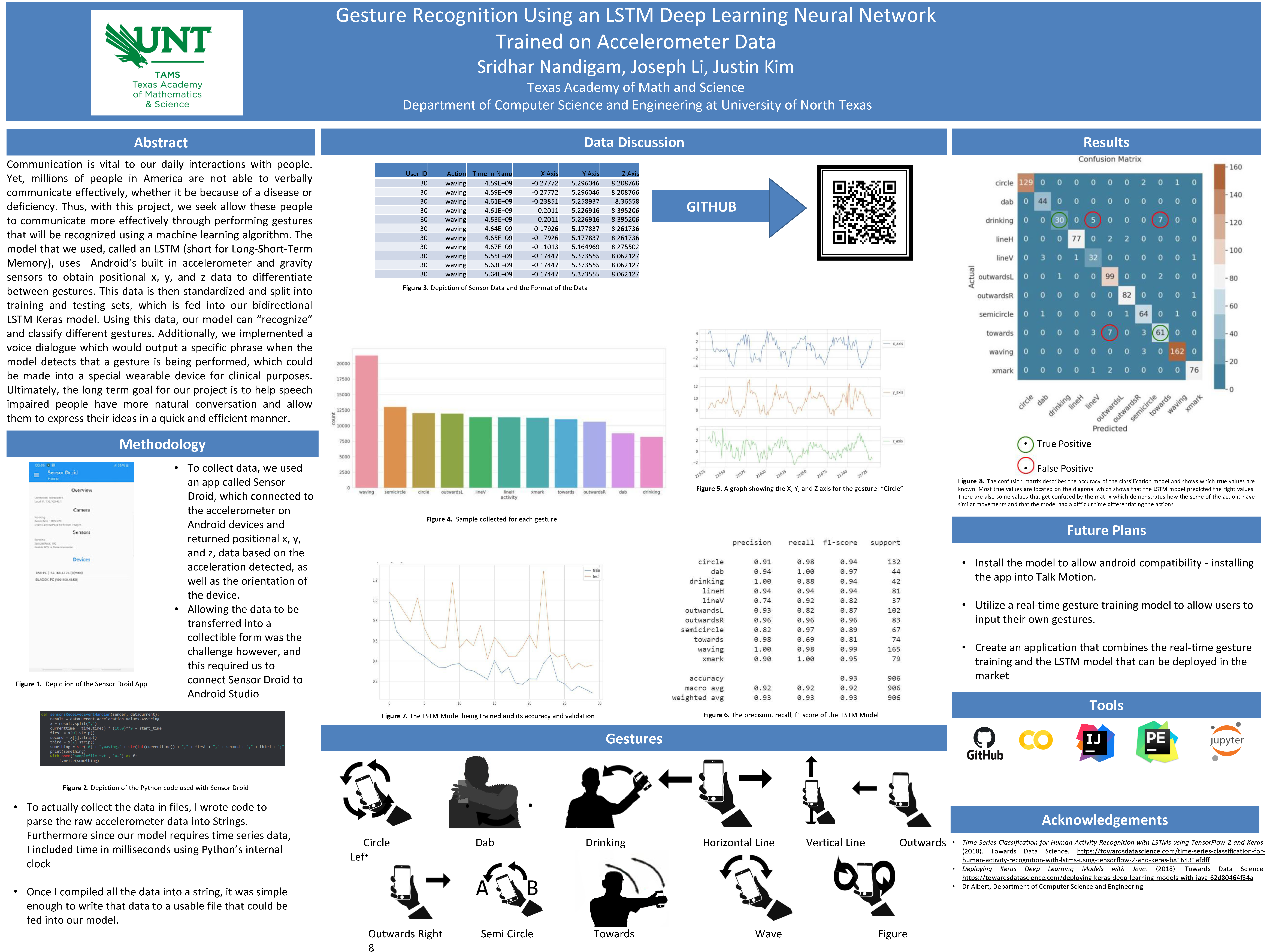

Many people around the world cannot communicate verbally or use sign language efficiently. To address this, the gesture recognition effort uses LSTMs, a type of recurrent neural network typically used for time series data such as accelerometer data. The LSTM model uses an Android device's built-in accelerometer and gravity sensors to detect and differentiate between gestures. Using the collected x-, y-, and z-axis data, the model assigns each gesture to its own category. The data are then standardized, split into training and testing sets, and fed into a bidirectional LSTM model built with Keras. Once trained, the model can predict each gesture from real-time accelerometer coordinates. This methodology provides a machine learning approach to recognizing gestures and producing voice dialogue. The approach could later be built into a specially made wearable device for clinical use. The long-term goal of this effort is to help speech-impaired people hold more natural conversations and express their ideas quickly and efficiently.

Poster:

Year:

2021
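The abstract describes the pipeline as follows: standardize the x-, y- and z-axis readings, split them into training and testing data, and feed them to a bidirectional LSTM built with Keras. That pipeline can be sketched in Python as shown below.

This is a minimal sketch under stated assumptions, not the project's code:
- The 50-sample window, 80/20 split, 64-unit LSTM layer and helper names (`split_recordings`, `fit_standardizer`, `standardize`, `build_model`, `classify_window`) are all hypothetical.
- The standardization statistics are fitted on the training split only, to avoid leaking test data. The abstract describes standardizing before splitting, so this ordering is a deliberate deviation.

```python
import numpy as np

# Hypothetical settings: the abstract gives no window length or model size.
WINDOW = 50    # accelerometer samples per gesture recording (assumed)
NUM_AXES = 3   # x, y and z readings


def split_recordings(X, y, test_fraction=0.2, seed=0):
    """Shuffle whole recordings, then hold out test_fraction of them for testing."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(X))
    n_test = int(round(len(X) * test_fraction))
    test_idx, train_idx = order[:n_test], order[n_test:]
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]


def fit_standardizer(X_train):
    """Per-axis mean and standard deviation over every training sample."""
    flat = X_train.reshape(-1, X_train.shape[-1])
    mean = flat.mean(axis=0)
    std = flat.std(axis=0)
    std[std == 0] = 1.0  # guard against an axis that never changes
    return mean, std


def standardize(X, mean, std):
    """Z-score each axis so x, y and z have zero mean and unit variance."""
    return (X - mean) / std


def build_model(num_classes, window=WINDOW, num_axes=NUM_AXES):
    """Bidirectional LSTM gesture classifier; layer sizes are illustrative guesses."""
    from tensorflow import keras  # imported here so preprocessing runs without TensorFlow

    model = keras.Sequential([
        keras.layers.Input(shape=(window, num_axes)),
        keras.layers.Bidirectional(keras.layers.LSTM(64)),
        keras.layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    return model


def classify_window(model, samples, mean, std):
    """Label the latest WINDOW live readings, given as an array of shape (WINDOW, 3)."""
    batch = standardize(np.asarray(samples, dtype=float), mean, std)[np.newaxis]
    probs = model.predict(batch, verbose=0)[0]
    return int(np.argmax(probs))


if __name__ == "__main__":
    # Synthetic stand-in data: 100 recordings labeled with 4 gesture classes.
    rng = np.random.default_rng(1)
    X = rng.normal(size=(100, WINDOW, NUM_AXES))
    y = rng.integers(0, 4, size=100)
    X_train, X_test, y_train, y_test = split_recordings(X, y)
    mean, std = fit_standardizer(X_train)
    X_train, X_test = standardize(X_train, mean, std), standardize(X_test, mean, std)
    print(X_train.shape, X_test.shape)
```

With TensorFlow installed, `build_model(num_classes=4).fit(X_train, y_train, validation_data=(X_test, y_test))` would train the classifier. After training, `classify_window` would label each new window of readings taken from the device's sensors. The bidirectional wrapper reads each window both forward and backward, which suits gestures whose end shape matters as much as their start.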